
Questions about Replacing the FFIEC CAT

The FFIEC Cybersecurity Assessment Tool (CAT) was officially retired in August 2025. The full FFIEC announcement regarding the sunset of the CAT is available here: cat-sunset-statement-ffiec-letterhead.pdf

To support institutions in transitioning, UFS has partnered with the Cyber Risk Institute (CRI) to adopt the CRI Profile as a replacement for the FFIEC CAT. CRI, a not-for-profit coalition of financial institutions and trade associations, developed the Profile specifically for use by the financial services sector. Built on the National Institute of Standards and Technology (NIST) Cybersecurity Framework, the Profile is recognized by international supervisory and regulatory bodies and was one of the two industry resources referenced in the FFIEC’s sunset announcement.

The CRI Profile uses a tier-based model to assess risk based on the potential impact an organization could have on global, national, sector, or local markets in the event of a significant cybersecurity incident.

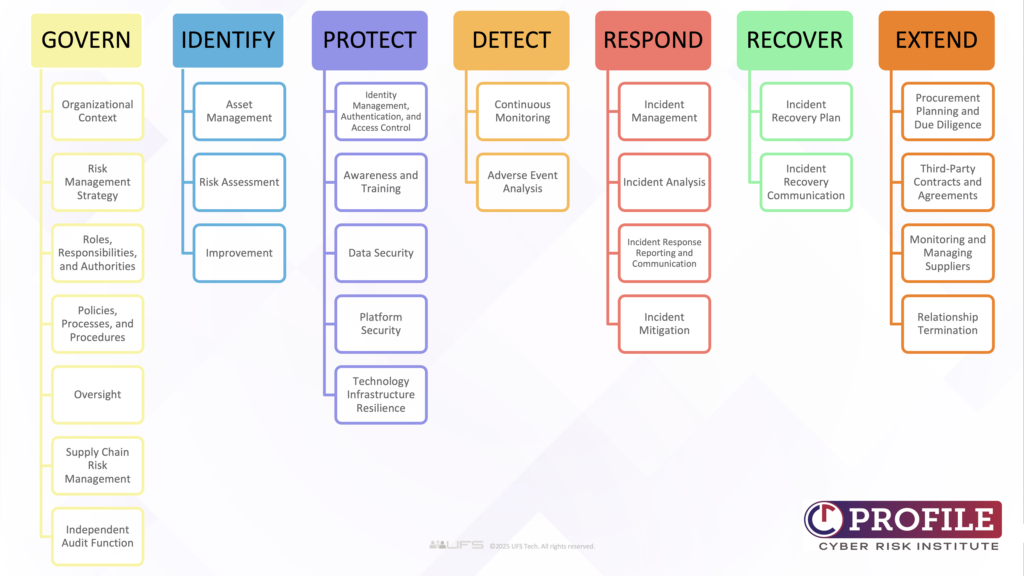

The Profile includes seven core Functions for assessing an organization’s cyber risk management program: Govern, Identify, Protect, Detect, Respond, Recover, and Extend. Each Function is further broken down into Categories (shown below) and Subcategories (not shown), each designed to reflect an element of an effective cyber risk management program.

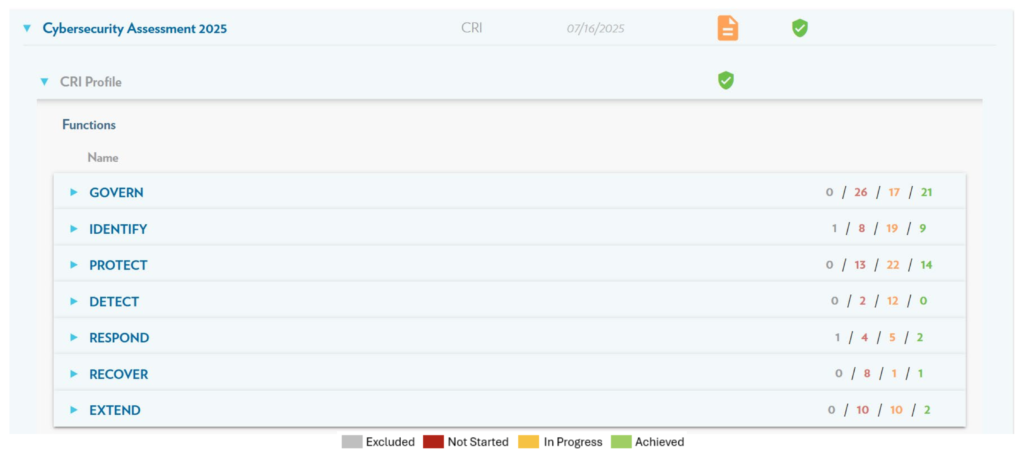

The CRI Profile is designed to be a standardized, repeatable assessment tool that financial institutions can use both to measure their cybersecurity posture and to support compliance with regulatory requirements. The UFS delivery model supplements the Profile with assistance from compliance experts while you complete the assessment, along with clear, informative reporting that delivers the assessment results to stakeholders.

How It Works

Each year, a dedicated UFS Advisory/Compliance Specialist will guide you through the CRI Profile experience, from importing your previous FFIEC CAT responses into the ECAT Application through completion of the entire CRI Profile process.

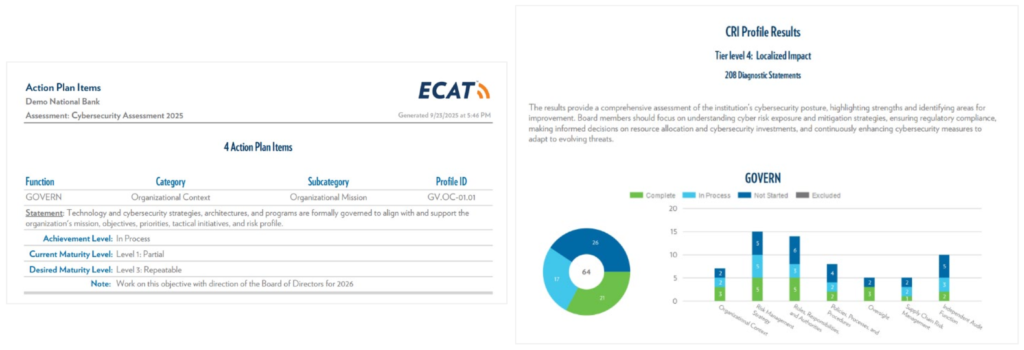

Along with the application, UFS has developed an action plan process, as well as supporting reports (examples shown below), to effectively communicate your cybersecurity preparedness to all stakeholders, from the institution’s Board of Directors to auditors and examiners.

If you are interested in learning more, please contact us at compliance@ufstech.com.

The CAT was originally released by the FFIEC in 2015 and subsequently updated in 2017. Since then, all U.S. federal banking regulators have encouraged their supervised institutions to complete the assessment annually. In August 2024, the FFIEC announced that it would discontinue support for the CAT and remove it from its website effective August 31, 2025. The FFIEC cited the availability of new and updated government and industry resources that financial institutions can leverage to manage cybersecurity risks more effectively.

The FFIEC referenced several alternative resources:

- Government resources:
  - NIST Cybersecurity Framework (CSF) v2.0
  - Cybersecurity and Infrastructure Security Agency’s (CISA) Cybersecurity Performance Goals (CPGs)
- Industry resources:
  - Cyber Risk Institute (CRI) Cyber Profile v2.0
  - Center for Internet Security (CIS) Critical Security Controls

While the FFIEC is not endorsing any specific tool or framework, institutions[1] are encouraged to adopt established, standards-based approaches aligned with their risk profile and control environment. Institutions opting for custom-built frameworks may attract unnecessary regulatory scrutiny.

[1] The NCUA is encouraging Credit Unions to continue using the ACET.


The ideal CAT replacement for banks should meet all of the criteria below:

- Based on a widely recognized cybersecurity framework (e.g., NIST Cybersecurity Framework)

  This ensures alignment with industry best practices, promotes consistency across institutions, and provides credibility with regulators and auditors.

- Maintained and regularly updated by a committed and reputable organization

  This keeps the framework current with emerging threats, regulatory changes, and evolving security standards, ensuring long-term relevance.

- Tailored to the specific challenges, regulatory expectations, and risk profiles of the financial industry

  The framework should reflect the high security standards and compliance obligations unique to financial institutions, including their exposure to cyber threats and the sensitivity of customer data.

- Supports structured assessment, tracking, and reporting of cybersecurity posture, including assessment-to-assessment comparison reports

  Financial institutions need the ability to evaluate their cybersecurity maturity over time, identify gaps, create an action plan for improvement, and demonstrate progress to stakeholders, including boards and regulators.

- Allows for customization to meet the unique needs of individual institutions

  Each financial institution has its own size, complexity, and risk appetite, so the framework should be flexible enough to accommodate these differences without compromising structure or rigor.

Additional considerations:

- Consider partnering with subject matter experts to assist with the completion of the assessment and interpretation of the assessment results

  Because all the alternative frameworks differ significantly from the FFIEC CAT, implementation can be resource-intensive, requiring substantial time, effort, and expertise both initially and on an ongoing basis. Developing a prescriptive, results-driven action plan also hinges on accurately interpreting assessment results. Engaging experts can ease the implementation and maximize long-term impact.

To help with your evaluation, below is a comparison chart, and you can also download the checklist.

Alternatives Comparison Chart

| Framework | Description | Standards-based? | Financial Industry Specific? |
| --- | --- | --- | --- |
| NIST Cybersecurity Framework (CSF) 2.0 | De facto standard | Yes | No |
| CISA Cybersecurity Performance Goals (CPG) | Applicable to critical infrastructure entities. Based on NIST 1.0 framework with addition of Governance function. | Yes, NIST v1.0* | No* |
| CRI Cyber Profile 2.0 | Based on NIST framework with additional Extend function and additional subcategories specific to financial industry. | Yes, NIST v2.0 | Yes |
| Center for Internet Security Critical Security Controls | Set of controls and best practices. Multi-standard mappings will eventually include financial industry standards. | Yes, NIST v2.0 | No |

*CISA anticipates releasing a financial sector-specific set of goals and updating the CPGs to NIST CSF 2.0 at some point (estimated Winter 2025).

Once your bank has selected a preferred alternative, you need to develop a plan for transitioning away from the CAT, which was retired in August 2025:

- Engage internal stakeholders (e.g., IT, compliance, risk management, and internal audit) to evaluate tool fit and implementation requirements.

- Transition to the framework and conduct a gap analysis comparing your most recent CAT assessment results with the results of your chosen framework.

- Document the rationale for your framework choice and communicate changes to your examiners and board of directors to demonstrate that you’ve implemented a risk-based, coordinated, and well-governed approach.

- Follow these best practices when conducting assessments:
  - Treat the assessment as a starting point: Use results to identify control gaps and define a roadmap toward your target cybersecurity maturity.
  - Ensure reporting capability: Select a framework or tool that enables the production of clear, comprehensive reports for stakeholders, examiners, and the board.
  - Expect ongoing updates: Choose frameworks supported by organizations committed to updating content to reflect evolving cyber threats and best practices.
  - Prioritize flexibility: Institutions should be able to tailor the framework to their specific size, complexity, and risk profile.
  - Be mindful of the transition process: The new framework should facilitate the mapping of your previous CAT assessment responses to the new framework to maintain assessment consistency, reduce duplication, and ease the transition.

By taking proactive steps to select and implement the right framework, you can ensure that your cybersecurity strategy remains robust, aligned with industry standards, and responsive to evolving threats.
