Thanks in part to social media, users today often don’t differentiate between work and non-work activities, and they certainly don’t want to have to carry multiple work/non-work devices to keep them connected. As a result, new multi-function, multi-purpose mobile devices are constantly being added to your secure financial institution network…and often in violation of your policies.

Most institutions have an IT Acquisition Policy, or something similar, that defines exactly how (and why) new technology is requested, acquired, implemented and maintained. The scope of the policy extends to all personnel who are approved to use network resources within the institution, and the IT Committee (or equivalent) is usually tasked with making the final purchasing decision. Although older policies may use language like “microcomputers” and “PCs”, the policy effectively covers all network-connected devices, including the new generation of devices like smartphones and iPads. Managing risk always begins with the acquisition policy…before the devices are acquired.

Your policy may differ in the specific language, but it should contain the following basic elements required of all requests for new technology:

- Description of the specific hardware and software requested, along with an estimate of costs (note what type of vendor support is available).

- Description of the intended use or need for the item(s).

- Description of the cost vs. benefits of acquiring the requested item(s).

- Analysis of information security ramifications of requested item(s).

- Time frame required for purchase.

Most of these are pretty straightforward to answer, but what about bullet #4, the analysis of information security ramifications? Are you able to apply the same level of information security standards to these multifunctional devices as you are to your PCs and laptops? Or does convenience trump security? This is where the provisions of your information security policy take over.

The usefulness of these always-on mobile devices is undeniable, and they have really bent the cost/benefit curve, but they have also re-drawn the security profile in many cases. The old adage is that a chain is only as strong as its weakest link, and in today’s IT infrastructure environment these devices are often the weak links in the security chain. So while your users have their feet on the accelerator of new technology adoption, the ISO (and the committee managing information security) needs to have both feet firmly on the brake unless they are willing to declare these devices as an exception to their security policy…which is definitely not recommended.

So how can you effectively manage these devices within the provisions of your existing information security program, without compromising your overall security profile? It might be worth reviewing what the FFIEC has to say about security strategy:

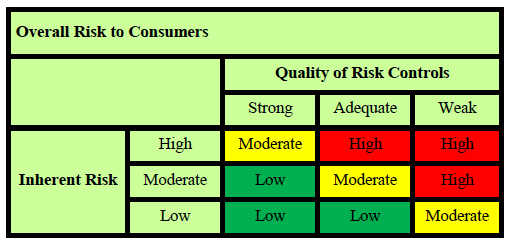

Security strategies include prevention, detection, and response, and all three are needed for a comprehensive and robust security framework. Typically, security strategies focus most resources on prevention. Prevention addresses the likelihood of harm. Detection and response are generally used to limit damage once a security breach has occurred. Weaknesses in prevention may be offset by strengths in detection and response.

Regulators expect you to treat all network devices the same, and clearly preventive risk management controls are preferred, but the fact is that many of the same well-established tools and techniques that are used for servers, PCs and laptops are either not available, or not as mature, in the smartphone/iPad world. Traditional tools such as patch management, anti-virus and remote access event log monitoring, and techniques such as multi-factor authentication and least permissions, are difficult if not impossible to apply to these devices. However, there are still preventive controls you can, and should, implement.

First of all, only deploy remote devices to approved users (as required by your remote access policy), and require connectivity via approved, secure connections (e.g. 3G/4G, SSL, secure WiFi). Require both power-on and wake pass codes. Require approval for all applications and utilize some form of patch management (manual or automated) for the operating system and the applications. Encrypt all sensitive data in storage, and utilize anti-virus/anti-spyware if available.
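If you want to spot check devices against the preventive controls listed above, the checklist can be expressed as a small script. The following is only a minimal sketch in Python: the `Device` fields, the connection labels, and the approved application list are hypothetical placeholders, not part of any real device management product, and would need to be mapped to whatever your own inventory or management tool actually reports.

```python
from dataclasses import dataclass, field

# Hypothetical labels for connection types your policy approves.
APPROVED_CONNECTIONS = {"cellular", "ssl", "secure_wifi"}
# Hypothetical list of applications approved by the IT Committee.
APPROVED_APPS = {"mail", "calendar"}


@dataclass
class Device:
    owner: str
    owner_approved: bool       # user approved under the remote access policy
    connection: str            # how the device connects to the network
    power_on_passcode: bool
    wake_passcode: bool
    storage_encrypted: bool
    installed_apps: set = field(default_factory=set)


def preventive_control_gaps(device, approved_apps=APPROVED_APPS):
    """Return a list of preventive controls the device fails to meet."""
    gaps = []
    if not device.owner_approved:
        gaps.append("user not approved for remote access")
    if device.connection not in APPROVED_CONNECTIONS:
        gaps.append("unapproved connection: " + device.connection)
    if not (device.power_on_passcode and device.wake_passcode):
        gaps.append("power-on or wake pass code missing")
    if not device.storage_encrypted:
        gaps.append("sensitive data not encrypted in storage")
    unapproved = sorted(device.installed_apps - set(approved_apps))
    if unapproved:
        gaps.append("unapproved apps: " + ", ".join(unapproved))
    return gaps
```

An empty list means the device passed every check in this sketch; anything else is a finding to follow up on. Patch level and anti-virus status are left out here because how they are reported varies widely by device.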

Because of the unavailability of preventive controls, maintaining your information security profile will likely rest on your compensating detective and corrective controls. Controls are somewhat limited in these areas as well, but include maintaining an up-to-date device inventory, and having tracking and remote wipe capabilities to limit damage if a security breach does occur.
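As a rough illustration of how a device inventory supports these detective and corrective controls, the sketch below flags devices that have been reported lost (candidates for remote wipe) or that have not checked in recently (candidates for investigation). It is written in Python under stated assumptions: the `InventoryEntry` fields and the seven-day threshold are hypothetical, and the actual wipe would be carried out by your device management tool, not by this code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class InventoryEntry:
    device_id: str
    owner: str
    last_check_in: datetime
    reported_lost: bool = False


def devices_needing_action(inventory, now, max_silence=timedelta(days=7)):
    """Map device_id to a recommended action for devices that need attention."""
    actions = {}
    for entry in inventory:
        if entry.reported_lost:
            # Corrective control: limit damage from a lost or stolen device.
            actions[entry.device_id] = "remote wipe"
        elif now - entry.last_check_in > max_silence:
            # Detective control: a silent device may be lost or out of policy.
            actions[entry.device_id] = "investigate"
    return actions
```

Running a report like this on a schedule is only useful if the inventory itself is kept current, which is why the inventory comes first.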

But there is one more very important preventive control you can use, and this one is widely available, mature, and highly effective…employee training. Require your remote device users to undergo initial, and periodic, training on what your policies say they are (and aren’t) allowed to do with their devices. You should still conduct testing of the remote wipe capability, and spot check for unencrypted data and unauthorized applications, but most of all train (and retrain) your employees.

Fact 2 – According to Rep. Shelley Moore Capito (R-W.Va.), author of H.R. 3461, “The Dodd-Frank Act has added so many new regulations to financial institutions, it has helped boost a 31% projected growth in job opportunities for Compliance Officers.”
