In the first update in over 10 years, the FFIEC just completely rewrote the definitive guidance on their expectations for managing information systems in financial institutions. This was widely expected, as the IT world has changed considerably since 2006.

There is much to unpack in this new handbook, starting with what appears to be a new approach to managing information security risk. The original 2006 handbook put the risk assessment process up front, essentially conflating risk assessment with risk management. But as I first mentioned almost 6 years ago, the risk assessment is only one step in risk management, and it’s not the first step. Before risk can be assessed you must identify the assets to be protected and the threats and vulnerabilities to those assets. Only then can you conduct a risk assessment. The new guidance uses a more traditional approach to risk management, correctly placing risk assessment in the second slot:

- Risk Identification

- Risk Measurement (aka risk assessment)

- Risk Mitigation, and

- Risk Monitoring and Reporting

This is a good change, and it is also identical to the risk management structure in the 2015 Management Handbook. It’s also very consistent with the four-phase process specified in the 2015 Business Continuity Handbook:

- Business Impact Analysis

- Risk Assessment

- Risk Management, and

- Risk Monitoring and Testing

Beyond that, here are a few additional observations (in no particular order):

More from Less:

- The new handbook is about 29% shorter, consisting of 98 pages as contrasted with 138 in the 2006 handbook (40 fewer pages).

…HOWEVER…

- The new guidance contains 412 references to the word “should”, as opposed to 341 references previously. This is significant, because compliance folks know that every occurrence of the word “should” in the guidance generally translates to the word “will” in your policies and procedures. So the handbook is almost 30% shorter, but increases regulator expectations by roughly 20%!
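For anyone who wants to check the arithmetic, here is a minimal sketch that recomputes the percentages from the page and “should” counts quoted above. The helper name `percent_change` and the “should per page” density figure are my own illustrative additions, not anything defined by the FFIEC.

```python
def percent_change(old: float, new: float) -> float:
    """Return the signed percentage change going from old to new."""
    if old == 0:
        raise ValueError("old value must be non-zero")
    return (new - old) / old * 100.0


# Counts quoted in this post (2006 handbook vs. the new handbook).
pages_2006, pages_new = 138, 98
should_2006, should_new = 341, 412

page_change = percent_change(pages_2006, pages_new)
should_change = percent_change(should_2006, should_new)

# Illustrative extra metric: occurrences of "should" per page.
density_2006 = should_2006 / pages_2006
density_new = should_new / pages_new
density_change = percent_change(density_2006, density_new)

print(f"Page count change:       {page_change:+.1f}%")
print(f"'should' count change:   {should_change:+.1f}%")
print(f"'should' per page:       {density_2006:.2f} -> {density_new:.2f}")
print(f"'should' density change: {density_change:+.1f}%")
```

Treat the per-page density as a rough indicator only, since a raw word count says nothing about how demanding any individual “should” actually is.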

Cyber Focus:

- “…because of the frequency and severity of cyber attacks, the institution should place an increasing focus on cybersecurity controls, a key component of information security.” Cybersecurity references are scattered throughout the new handbook, which also devotes an entire section to the topic.

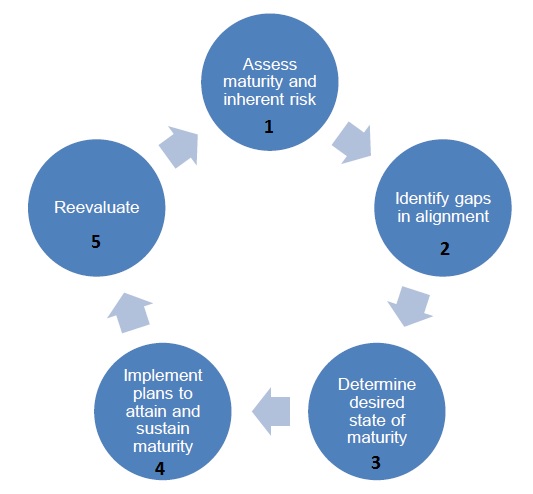

Assess Yourself:

- There are 17 separate references to “self-assessments”, increasing the importance of utilizing internal assessments to gauge the effectiveness of your risk management and control processes.

Take Your Own Medicine:

- Technology Service Providers to financial institutions will be held to the same set of standards:

- “Examiners should also use this booklet to evaluate the performance by third-party service providers, including technology service providers, of services on behalf of financial institutions.”

The Ripple Effect:

- The impact of this guidance will likely be quite significant, and will be felt across all IT areas. For example, the Control Maturity section of the Cybersecurity Assessment Tool contains 98 references and hyperlinks to specific pages in the 2006 Handbook. All of these are now invalid. I’m sure we can expect an updated assessment tool from the FFIEC at some point in the not-too-distant future. (Which will also necessitate changes to certain online tools!)

- The new FDIC IT Risk Examination procedures (InTREx) also contain several references to the IT Handbook, although they are not specific to any particular page.

Regarding InTREx, I was actually hoping that the new IT Handbook and the new FDIC exam procedures would be more closely coordinated, but perhaps that’s too much to ask at this point. In any case, the similarity between the 3 recently released Handbooks indicates increased standardization, and I think that is a good thing. We will continue to dissect this document and report observations as we find them. In the meantime, don’t hesitate to reach out with your own observations.
